Introduction

If you dabble in programming, you may already be familiar with the term ‘containerization’. If not, containerization is a way of making applications more manageable by distributing them in self-contained, portable packages. Each package is lightweight and isolated, complete with its own environment. These individual packages are known as containers.

Part of why containerization is becoming such a popular option for developers is that it makes deployment super easy. Not to mention, applications become much more scalable and easier to manage. There are many tools currently available that you can use to make your applications more suited for distributed systems. One of the most popular ones among them is Docker.

Docker is a containerization platform. It is an ecosystem consisting of several useful tools that let you simplify and standardize your software development process. In this article, we will provide an overview of Docker and demonstrate how you can use it efficiently.

An Overview of Containerization Using Docker

In addition to making container management super simple, Docker also integrates well with other software. You can use it alongside a whole host of open-source projects and tools. The following is a simplified overview of how a container works in the Docker ecosystem:

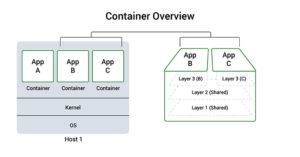

On the left, we see a concise and neatly packaged view of a container on a given host system. As you can see, Docker isolates the application as well as the resources it requires. The right part of the diagram shows a more expanded view of the container. This one in particular shows “layering”. Layering means that a container is built from a stack of layers, and several containers can share the layers they have in common. This helps reduce the amount of resources being used.
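To make the effect of layering more concrete, here is a minimal Python sketch that compares the storage needed when every container keeps private copies of its layers with the storage needed when shared layers are kept only once. The layer names, sizes, and container definitions are made up for illustration and do not come from Docker itself.

```python
# Hypothetical layer sizes in MB. The names and numbers are illustrative only.
LAYER_SIZES_MB = {
    "base-os": 80,
    "python-runtime": 45,
    "web-app": 12,
    "worker-app": 9,
}

# Each container is described by the ordered list of layers it is built from.
CONTAINERS = {
    "web": ["base-os", "python-runtime", "web-app"],
    "worker": ["base-os", "python-runtime", "worker-app"],
}


def size_without_sharing(containers, sizes):
    """Total size if every container kept a private copy of each layer."""
    return sum(sizes[layer] for layers in containers.values() for layer in layers)


def size_with_sharing(containers, sizes):
    """Total size if identical layers are stored only once on the host."""
    unique_layers = {layer for layers in containers.values() for layer in layers}
    return sum(sizes[layer] for layer in unique_layers)


if __name__ == "__main__":
    print("without sharing:", size_without_sharing(CONTAINERS, LAYER_SIZES_MB), "MB")
    print("with sharing:", size_with_sharing(CONTAINERS, LAYER_SIZES_MB), "MB")
```

In this toy example, the two containers share the base and runtime layers, so only their small application layers add to the total.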

One approach many people usually take when using Docker is creating a service-oriented architecture. Here, you would divide your app into containers based on functionality. This makes the app architecture much more flexible and scalable.

You can also check out an overview of the types and uses of different Docker containers available on our PaaS platform here.

Benefits of Using Docker

As we mentioned in the previous section, Docker is a popular containerization tool because of the flexibility and scalability it offers. In addition to that, Docker enables you to build portable, lightweight applications that use minimal resources. You can also use it to standardize your containers, which helps make the interactions between their interfaces more predictable and reliable.

Understanding Service Discovery

The next thing you need to learn about in the Docker ecosystem is service discovery and global configuration stores. Service discovery contributes to the scalability and flexibility of Docker containers. It enables a container to learn about its environment without needing assistance from the administrator. Containers use this information to announce themselves and to find the other components they depend on. The same tools can also serve as global configuration stores.

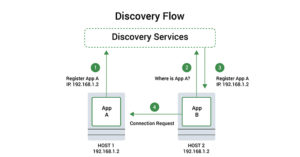

In this diagram, we see a discovery flow. First, an application registers its connection information with the discovery service. Once it has registered, other applications can query the discovery service to figure out how to connect to that application.
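The register-then-query flow can be sketched in a few lines of Python. This is only an in-memory stand-in, assuming a single process. The `DiscoveryService` class, its method names, and the example address are hypothetical and are not the API of any real discovery tool.

```python
class DiscoveryService:
    """In-memory stand-in for a discovery service (hypothetical, not a real client)."""

    def __init__(self):
        self._services = {}

    def register(self, name, host, port):
        """Called by an application when it comes online."""
        self._services[name] = {"host": host, "port": port}

    def deregister(self, name):
        """Called when an application goes away; unknown names are ignored."""
        self._services.pop(name, None)

    def lookup(self, name):
        """Called by other applications; returns None if the name is unknown."""
        return self._services.get(name)


if __name__ == "__main__":
    discovery = DiscoveryService()
    # The database container announces where it can be reached.
    discovery.register("database", "10.0.0.5", 5432)
    # A web container asks how to connect to the database.
    print(discovery.lookup("database"))
```

A real discovery service would be reachable over the network and shared by every host in the cluster, but the two steps stay the same: register first, then let others look you up.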

Globally Distributed Stores

Globally distributed stores typically expose an HTTP API for getting and setting key-value pairs. Some also offer access control and encrypted entries. The main purpose of these stores is self-configuration, which makes bringing up new containers easier. They also supply applications with the connection data for the services they require. In short, they allow registration of connection information, provide information about cluster members, and offer a safe, universally accessible location for storing configuration data.

Service Discovery Tools

To better understand, here are some of the most commonly used service discovery tools and projects:

- etcd, consul, and zookeeper are service discovery tools or globally distributed key-value stores.

- crypt is a project that helps encrypt etcd entries.

- confd monitors any changes in the key-value store and starts reconfiguration.
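To illustrate how a key-value store and a watcher in the style of confd fit together, here is a small in-memory Python sketch. It is an assumption-laden toy: the `KeyValueStore` class, the `/services/web/` key layout, and the rendered `server ...;` lines are all hypothetical and do not reflect how etcd or confd are actually implemented.

```python
class KeyValueStore:
    """Toy in-memory key-value store with prefix watches (hypothetical)."""

    def __init__(self):
        self._data = {}
        self._watchers = []

    def watch(self, prefix, callback):
        """Call callback(key, value) whenever a key under prefix is set."""
        self._watchers.append((prefix, callback))

    def set(self, key, value):
        self._data[key] = value
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value)

    def get(self, key, default=None):
        return self._data.get(key, default)

    def items_with_prefix(self, prefix):
        return {k: v for k, v in self._data.items() if k.startswith(prefix)}


def render_upstreams(store, prefix="/services/web/"):
    """Render a config fragment from every entry stored under the prefix."""
    backends = store.items_with_prefix(prefix)
    return "\n".join(f"server {addr};" for _, addr in sorted(backends.items()))


if __name__ == "__main__":
    store = KeyValueStore()
    # Re-render the fragment every time a web backend is added or changed.
    store.watch("/services/web/", lambda key, value: print(render_upstreams(store), "\n"))
    store.set("/services/web/1", "10.0.0.5:8080")
    store.set("/services/web/2", "10.0.0.6:8080")
```

Each time a new backend registers itself under the watched prefix, the fragment is rendered again, which is the general idea behind automatic reconfiguration.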

Networking Tools Provided by Docker

As we talked about in a previous section, Docker helps create service-oriented designs. This is a special feature of containerized applications. We essentially break down and divide an application into multiple components based on functionality. Containers can communicate and network with other containers as well as with the host. For this purpose, Docker provides many basic networking tools. These are important to ensure that each component is performing the functions it is designed for.

The in-system networking tools for containers in Docker work in either of two ways. First, you can opt for external routing. To do this, you will have to open the ports of the container and manually map them to the host. You can also let Docker choose a port for you randomly. On the other hand, you can use something called “links” to connect containers. They are especially useful if you want containers to connect automatically. When linked, containers have each other’s connection information.
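As a rough illustration of these options, the Python sketch below only assembles the argument list for a `docker run` command without executing it. The helper name `docker_run_args` and the example container and image names are hypothetical. The flags themselves are standard `docker run` options: `-p` maps a host port to a container port, `-P` lets Docker pick host ports for the exposed ports, and `--link` connects containers. Note that current Docker documentation describes `--link` as a legacy feature, so treat that part as a sketch of the concept.

```python
def docker_run_args(image, name, port_map=None, publish_all=False, links=None):
    """Build (but do not run) the argument list for a detached `docker run`."""
    args = ["docker", "run", "-d", "--name", name]
    # Manual mapping: host port -> container port.
    for host_port, container_port in (port_map or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]
    # Let Docker choose host ports for every port the image exposes.
    if publish_all:
        args.append("-P")
    # Links: name of the target container -> alias seen inside this container.
    for target, alias in (links or {}).items():
        args += ["--link", f"{target}:{alias}"]
    args.append(image)
    return args


if __name__ == "__main__":
    print(" ".join(docker_run_args("nginx", "web", port_map={8080: 80})))
    print(" ".join(docker_run_args("nginx", "web-random", publish_all=True)))
    print(" ".join(docker_run_args("my-app", "app", links={"database": "db"})))
```

Passing the resulting list to `subprocess.run` would start the container on a machine with Docker installed, but building the list separately makes it easy to inspect the command first.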

This is just a very basic level of networking with containers. Docker also supports more complex networking setups. It allows you to set up overlay networks, use VPNs, assign subnets, create macvlan communication interfaces, and configure custom gateways and MAC addresses for your containers.

Docker Networking Projects

Following are a few popular networking projects in Docker:

- flannel is an overlay network that gives each host an individual subnet.

- weave is an overlay network that puts all containers on one network.

- pipework is an advanced networking toolkit that assists with advanced configurations.
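The idea of giving each host its own subnet can be sketched with Python's standard `ipaddress` module. This is only an illustration of the concept, not how flannel is implemented. The function name, the `10.1.0.0/16` overlay range, the `/24` subnet size, and the host names are all assumptions.

```python
import ipaddress


def allocate_host_subnets(overlay_cidr, hosts, host_prefix=24):
    """Hand each host the next free subnet carved out of the overlay network."""
    overlay = ipaddress.ip_network(overlay_cidr)
    subnets = overlay.subnets(new_prefix=host_prefix)
    allocation = {}
    for host in hosts:
        try:
            allocation[host] = next(subnets)
        except StopIteration:
            raise ValueError("overlay network has no free subnets left") from None
    return allocation


if __name__ == "__main__":
    for host, subnet in allocate_host_subnets("10.1.0.0/16", ["host-a", "host-b"]).items():
        print(host, "->", subnet)
```

Containers on `host-a` would then take addresses from the first subnet and containers on `host-b` from the second, so addresses never collide across hosts.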

Scheduling and Managing Clusters

Lastly, we will explore how you can deal with scheduling, cluster management, and orchestration in Docker.

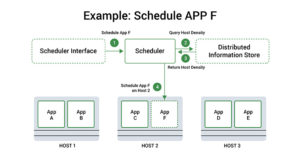

A scheduler is a component that is helpful in clustered container environments. It allows you to start containers on any available host. Let’s observe how a simple scheduling decision would play out:

First, the request goes through a management tool to the scheduler. The scheduler checks the request against the state of the available hosts. To do so, it pulls information about the hosts from a discovery service and then places the application on one of them. The selected host is typically the one that is the least busy. But host selection is not the only job a scheduler performs. It can also load containers onto the host, start and stop them, and manage their whole life cycle.
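A simple "least busy host" decision can be sketched as follows. This is a minimal illustration that assumes the scheduler already knows how many containers each host is running. The function names and the host names are hypothetical, and real schedulers weigh many more factors.

```python
def pick_least_busy(host_load):
    """Return the host running the fewest containers (ties broken by name)."""
    if not host_load:
        raise ValueError("no hosts available")
    return min(sorted(host_load), key=lambda host: host_load[host])


def schedule(container, host_load):
    """Place a container on the least busy host and record the new load."""
    host = pick_least_busy(host_load)
    host_load[host] += 1
    return host


if __name__ == "__main__":
    # Number of containers currently running on each host (made-up values).
    load = {"host-a": 3, "host-b": 1, "host-c": 2}
    for container in ["web-1", "web-2", "web-3"]:
        print(container, "->", schedule(container, load))
```

Because the load is updated after every placement, repeated requests spread out over the cluster instead of piling up on one host.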

This process can also be automated. In such a case, the admin can specify constraints, such as scheduling a container on the same host as another container or on a different one. Other constraints include putting the container on the least busy host, on a host with matching labels, or on every host in the cluster.
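Constraints like these can be thought of as filters that run before the least-busy choice. The Python sketch below shows one way to express label matching and a "keep away from this container" rule. The `Host` class, the `place` function, and the label names are hypothetical and only meant to illustrate the idea.

```python
class Host:
    """Minimal description of a cluster host (hypothetical)."""

    def __init__(self, name, labels=None, containers=None):
        self.name = name
        self.labels = dict(labels or {})
        self.containers = list(containers or [])


def eligible_hosts(hosts, required_labels=None, avoid=None):
    """Keep hosts that carry all required labels and do not run `avoid`."""
    required_labels = required_labels or {}
    result = []
    for host in hosts:
        has_labels = all(host.labels.get(k) == v for k, v in required_labels.items())
        conflicts = avoid is not None and avoid in host.containers
        if has_labels and not conflicts:
            result.append(host)
    return result


def place(container, hosts, required_labels=None, avoid=None):
    """Apply the constraints, then pick the least busy remaining host."""
    candidates = eligible_hosts(hosts, required_labels, avoid)
    if not candidates:
        raise RuntimeError(f"no host satisfies the constraints for {container!r}")
    target = min(candidates, key=lambda h: len(h.containers))
    target.containers.append(container)
    return target.name


if __name__ == "__main__":
    cluster = [
        Host("host-a", {"disk": "ssd"}, ["db"]),
        Host("host-b", {"disk": "ssd"}),
        Host("host-c", {"disk": "hdd"}),
    ]
    # Needs an SSD host, but must not share a host with the "db" container.
    print(place("cache", cluster, required_labels={"disk": "ssd"}, avoid="db"))
```

Scheduling on every host in the cluster would simply mean calling the placement step once per host instead of choosing a single target.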

Orchestration comes into the picture with cluster management. It is a combination of scheduling for containers and management for hosts.

Schedulers and Management Tools

Next, here are some of the most popular projects that function as schedulers and fleet management tools:

- fleet, marathon, and swarm are schedulers and cluster management tools.

- mesos is a host abstraction service that consolidates host resources.

- kubernetes is an advanced scheduler that you can use to manage container groups.

- compose is an orchestration tool.

Conclusion

Hopefully, this guide gives you the essential knowledge you need to get started with Docker. The ecosystem is quite straightforward to use but allows you to dabble in more complex functions as well. The platform enables you to introduce great levels of scalability, flexibility, and simplicity into your applications.

For more resources on Docker on our blog, you can check out the following:

- How To Share Data Between a Docker Container and a Host

- How to install & operate Docker on Ubuntu in the public cloud

- Installing and Setting up Docker on CentOS 7

- Deploying Laravel, Nginx, and MySQL with Docker Compose

- Clean Up Docker Resources – Images, Containers, and Volumes

Happy Computing!
