Introduction

High Availability Proxy (HAProxy) is a popular open-source TCP/HTTP load balancer and proxying solution that runs on Linux, FreeBSD, and Solaris. It is most commonly used to improve the performance and reliability of a server environment by distributing the workload across multiple servers. HAProxy is used in many high-profile environments, including Instagram, GitHub, Twitter, and Imgur.

This guide will introduce you to HAProxy, acquaint you with the load-balancing terminology, and provide examples of how it could be leveraged to bolster both the performance and reliability of server environments.

Essential HAProxy Terms

Before getting into the details of load balancing and proxying, there are some important terms and concepts to become familiar with. We will begin by reviewing these concepts in the following sections.

ACL (Access Control List)

In load balancing, ACLs are used to test a condition and then perform an action based on the result, such as selecting a server or blocking a request. This allows traffic to be forwarded flexibly based on factors such as pattern matching and the number of connections to a backend. Here is an example of an ACL:

```
acl url_blog path_beg /blog
```

In this case, the ACL matches if the path of the user's request begins with /blog. For example, a request for http://yourdomain.com/blog/blog-entry-1 would match. The HAProxy Configuration Manual contains a detailed guide to ACL usage.
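To make the matching rule concrete, here is a minimal Python model of how a path_beg ACL evaluates a request. It is a simplified sketch, not HAProxy's implementation, and the function name path_beg_matches is hypothetical. It reflects two properties of path_beg. First, an ACL listing several patterns matches if any one of them matches. Second, the test is a plain string-prefix check on the path, with the query string excluded.

```python
def path_beg_matches(uri: str, patterns: list[str]) -> bool:
    """Simplified model of `acl <name> path_beg <pattern> ...`.

    The path is the URI without its query string; the ACL matches if the
    path starts with ANY of the listed patterns.
    """
    path = uri.split("?", 1)[0]
    return any(path.startswith(pattern) for pattern in patterns)


# acl url_blog path_beg /blog
url_blog = ["/blog"]
print(path_beg_matches("/blog/blog-entry-1", url_blog))  # True
print(path_beg_matches("/blogroll", url_blog))           # True: plain prefix match
print(path_beg_matches("/about?ref=/blog", url_blog))    # False: query string is ignored
```

The /blogroll case shows a common surprise with prefix matching. A pattern with a trailing slash (/blog/) avoids it, but it no longer matches the bare /blog path.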

The Backend

The backend is the set of servers that receives forwarded requests. Backends are defined in the backend section of the HAProxy configuration. In its most basic form, a backend is defined by which load-balancing algorithm to use and a list of servers and ports. A backend can contain a single server or many. Adding servers to a backend increases its potential load capacity by spreading the load across them. It also improves reliability: if some backend servers go offline, the remaining ones continue to handle requests.

Let’s look at an example of a configuration of two backends. In this case, they are a blog-backend and a web-backend. Each has two web servers, listening on port 80:

```
backend web-backend
   balance roundrobin
   server web1 web1.yourdomain.com:80 check
   server web2 web2.yourdomain.com:80 check

backend blog-backend
   balance roundrobin
   # Layer 7 (HTTP) proxying for this backend
   mode http
   server blog1 blog1.yourdomain.com:80 check
   server blog2 blog2.yourdomain.com:80 check
```

The balance roundrobin line specifies the load-balancing algorithm, which is described in the Algorithms for Load Balancing section. The mode http line enables layer 7 proxying, which is explained in the Load Balancing Types section. Finally, the check option at the end of each server directive enables health checks on that backend server.

The Frontend

The frontend defines how requests are forwarded to backends. Frontends are defined in the frontend section of the HAProxy configuration. A frontend consists of the following:

- a set of IP addresses and a port
- ACLs
- use_backend rules, which select a backend depending on which ACL conditions match

A default_backend rule handles all other cases. The next section explains how a frontend can be configured for different types of network traffic.

Load Balancing Types

With the basic components used for load balancing established, we can now move on to the basic types of load balancing.

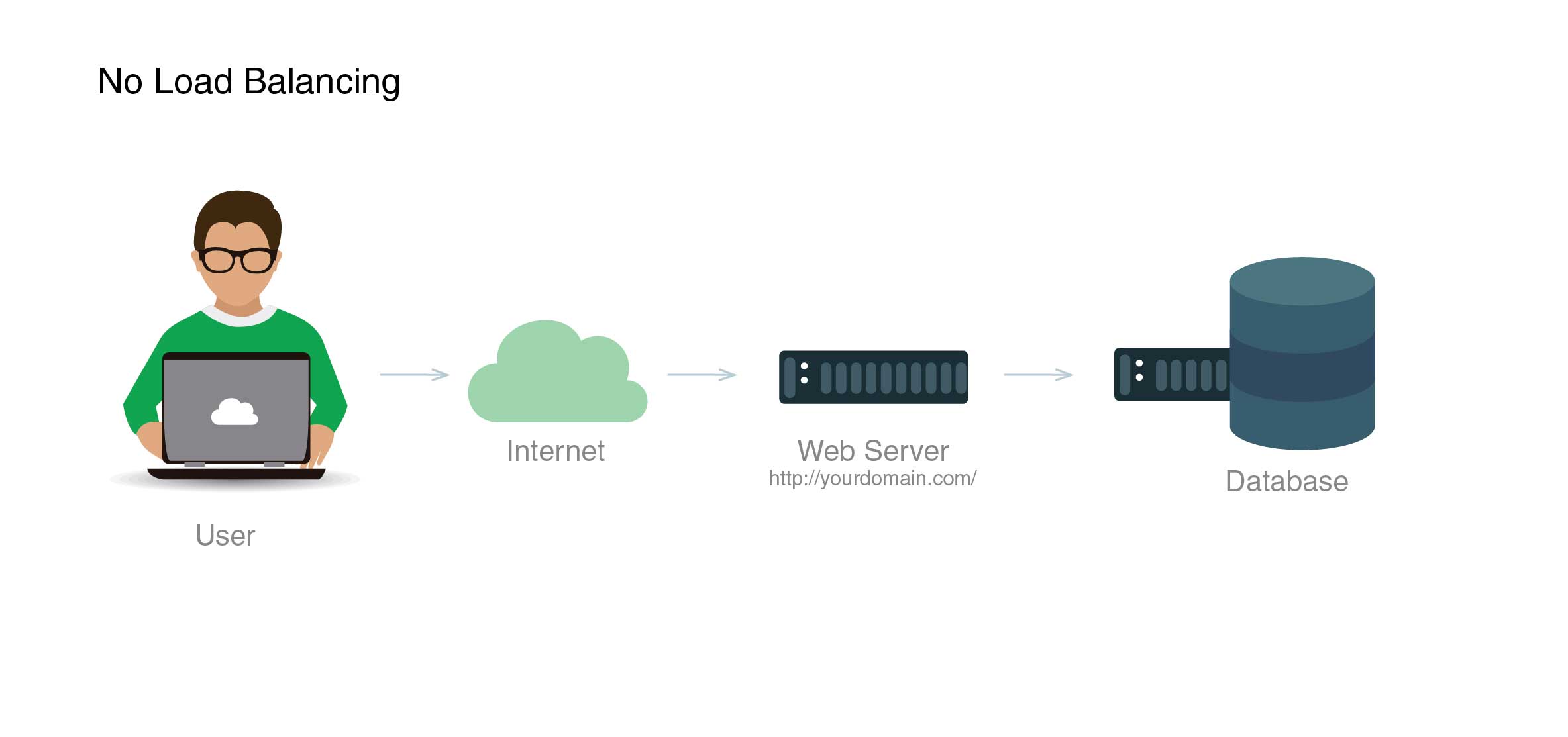

No Load Balancing

A simple web application environment with no load balancing might look like this:

In this scenario, the user connects directly to the web server at yourdomain.com, and there is no load balancing. Because there is only one web server, users lose all access if it goes offline. Likewise, if many users try to connect to that single server at the same time and it cannot handle the load, their connections may slow down or fail entirely.

Load Balancing (Layer 4)

One of the simplest ways to balance network traffic across multiple servers is layer 4 (transport layer) load balancing. This method forwards user traffic based on IP range and port. For example, if a request comes in for http://yourdomain.com/anything, the traffic is forwarded to the backend that handles all requests for yourdomain.com on port 80.

A basic layer 4 load-balancing setup looks like this:

When the user accesses the load balancer, it forwards the user's request to the web-backend group of servers, and the selected backend server responds to the request. To avoid users seeing inconsistent data, all servers in web-backend should serve identical content. In this setup, both web servers connect to the same database server.

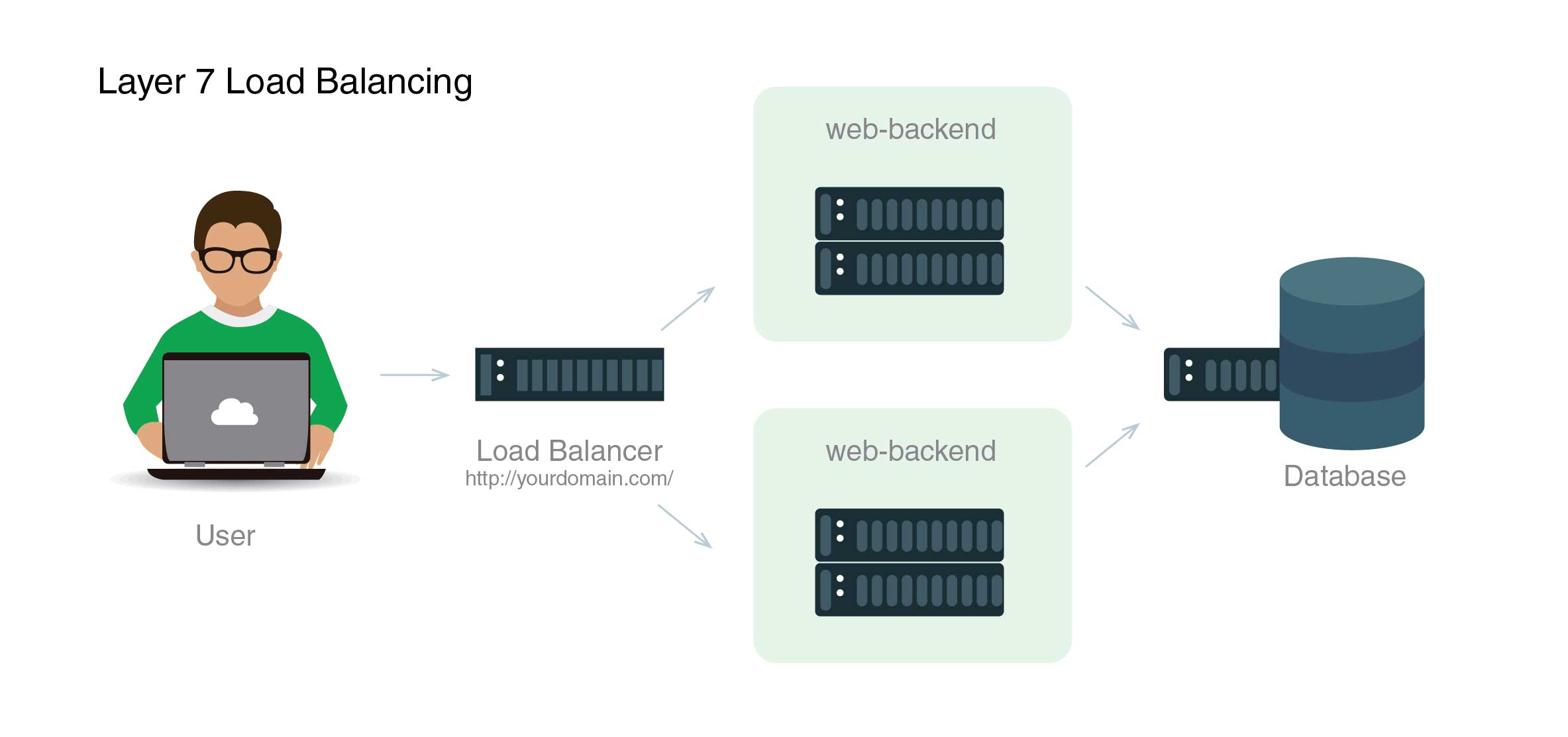

Load Balancing (Layer 7)

A more complex way to balance network traffic is layer 7 (application layer) load balancing. With this approach, the load balancer forwards requests to different backend servers based on the content of each user's request. This makes it possible to run multiple web application servers under the same domain and port. For more details on layer 7, see the HTTP subsection of our tutorial The Nitty Gritty of Networking: Learn about Terminology, Interfaces, and Protocols.

The following diagram illustrates layer 7 load balancing:

In this case, when a user requests yourdomain.com/blog, the request is forwarded to blog-backend, a set of servers dedicated to running the blog application. All other requests are forwarded to web-backend. Both backends use the same database server.

A small piece of frontend configuration for layer 7 load balancing might look like the following. It configures a frontend named http to handle all incoming traffic on port 80:

```
frontend http
  bind *:80
  mode http

  acl url_blog path_beg /blog
  use_backend blog-backend if url_blog

  default_backend web-backend
```

- acl url_blog path_beg /blog matches a request if the path of the user's request begins with /blog.

- use_backend blog-backend if url_blog uses that ACL to proxy matching traffic to blog-backend.

- default_backend web-backend specifies that all other traffic is forwarded to web-backend.
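The frontend's decision logic can be modeled in a few lines of Python. In HAProxy, use_backend rules are evaluated in the order they appear and the first matching rule wins. default_backend applies only when no rule matches. The route function and the tuple-based rule list are hypothetical names for this sketch, and each ACL is reduced to a Python predicate.

```python
from typing import Callable

# A rule pairs an ACL-like condition with the backend it selects.
Rule = tuple[Callable[[str], bool], str]


def route(path: str, rules: list[Rule], default_backend: str) -> str:
    """Return the backend for a request path: the first matching rule wins."""
    for condition, backend in rules:
        if condition(path):
            return backend
    return default_backend


# Mirrors the frontend configuration above:
#   acl url_blog path_beg /blog
#   use_backend blog-backend if url_blog
#   default_backend web-backend
rules: list[Rule] = [(lambda p: p.startswith("/blog"), "blog-backend")]

print(route("/blog/blog-entry-1", rules, "web-backend"))  # blog-backend
print(route("/index.html", rules, "web-backend"))         # web-backend
```

Because evaluation stops at the first match, more specific rules should be listed before broader ones.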

Algorithms for Load Balancing

The load-balancing algorithm determines which backend server is selected for each request. HAProxy offers several algorithms. You can also assign a weight to each server to control how often it is selected relative to the others. There are too many algorithms to describe here, so this guide covers only the most common ones; see the HAProxy Documentation Converter for the full list. The most commonly used algorithms are:

- roundrobin: The default algorithm, which selects servers in turn.

- leastconn: Selects the server with the fewest active connections. Servers with the same number of connections are rotated in round-robin fashion. This algorithm is recommended for longer sessions.

- source: Selects the server based on a hash of the source IP address of the request. This ensures that a given user connects to the same server, as long as the set of available servers does not change.
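The following Python simulation makes these three algorithms concrete. It is a simplified model, not HAProxy's source code, and all function and class names are hypothetical:

- Round robin uses a common "smooth" weighted scheme, so a weight parameter can be shown.
- leastconn is tracked with simple per-server counters.
- source uses SHA-256 as a stand-in for HAProxy's own hash function.

```python
import hashlib
from typing import Iterator


def smooth_weighted_round_robin(weights: dict[str, int]) -> Iterator[str]:
    """Yield servers in turn; a weight-3 server is picked 3x as often as a
    weight-1 server, interleaved rather than in bursts. Equal weights give
    plain round robin."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    while True:
        for name, weight in weights.items():
            current[name] += weight
        chosen = max(current, key=current.get)  # ties go to the earlier server
        current[chosen] -= total
        yield chosen


class LeastConnections:
    """Pick the server with the fewest active connections, rotating among ties."""

    def __init__(self, servers: list[str]):
        self.active = {name: 0 for name in servers}
        self._turn = 0

    def acquire(self) -> str:
        fewest = min(self.active.values())
        tied = [name for name, n in self.active.items() if n == fewest]
        server = tied[self._turn % len(tied)]
        self._turn += 1
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


def source_hash(client_ip: str, servers: list[str]) -> str:
    """Map a client IP address to a server deterministically."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


rr = smooth_weighted_round_robin({"web1": 3, "web2": 1})
print([next(rr) for _ in range(4)])  # ['web1', 'web1', 'web2', 'web1']

lc = LeastConnections(["web1", "web2"])
lc.acquire()                # 'web1' (both idle); imagine a long session here
short = lc.acquire()        # 'web2'
lc.release(short)           # the short session on web2 ends
print(lc.acquire())         # 'web2': it now has fewer connections than web1

print(source_hash("203.0.113.7", ["web1", "web2"]))  # same answer on every call
```

The modulo over len(servers) in source_hash shows why source-based stickiness depends on a stable server list. Adding or removing a server changes the divisor, so some clients are remapped.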

Sticky Sessions

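Some applications require that a user keeps connecting to the same backend server. This persistence is achieved through sticky sessions, configured in the backend that requires it. Older HAProxy versions used the appsession parameter for this. That parameter was removed in HAProxy 1.6, and current versions use the cookie directive or stick tables instead.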
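Cookie-based persistence works roughly as follows. A new client is balanced normally and receives a cookie naming the server it landed on. Afterwards, any request carrying that cookie goes back to the same server. The Python sketch below is a hypothetical model of that idea, not HAProxy code. The class name and the SERVERID cookie name are assumptions, and the sketch ignores what happens when the pinned server is down.

```python
from itertools import cycle


class CookiePersistence:
    """Hypothetical model of cookie-based sticky sessions.

    New clients are balanced round-robin and told, via a cookie, which server
    they landed on; returning clients presenting that cookie are sent back to
    the same server.
    """

    def __init__(self, servers: list[str], cookie_name: str = "SERVERID"):
        self.servers = servers
        self.cookie_name = cookie_name
        self._rotation = cycle(servers)

    def route(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return (chosen server, cookies to set on the response)."""
        pinned = cookies.get(self.cookie_name)
        if pinned in self.servers:
            return pinned, {}  # sticky: reuse the server named in the cookie
        server = next(self._rotation)  # no valid cookie: balance normally
        return server, {self.cookie_name: server}


lb = CookiePersistence(["web1", "web2"])
print(lb.route({}))                     # ('web1', {'SERVERID': 'web1'})
print(lb.route({"SERVERID": "web1"}))   # ('web1', {}): same user, same server
print(lb.route({}))                     # ('web2', {'SERVERID': 'web2'}): a new user
```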

Processing Health Checks

HAProxy needs a way to determine whether a backend server can process requests, so that it can stop sending traffic to a server that goes offline. By default, it runs a health check that tries to establish a TCP connection to the server on its configured IP address and port.

If a server fails the health check, HAProxy treats it as unable to serve requests and automatically disables it in the backend. Traffic is no longer forwarded to that server until it becomes healthy again. In some cases, however, the default TCP check is not enough to tell whether a server is healthy. A more specific check is then needed, such as an HTTP health check configured with option httpchk.
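The Python sketch below models the default check: can a TCP connection to the server's address and port be opened? It also models the rise/fall thresholds HAProxy applies so that a single failed probe does not immediately take a server out of rotation. A server is marked down after fall consecutive failures and back up after rise consecutive successes; HAProxy's defaults are fall 3 and rise 2. The function and class names are hypothetical, and the 2-second timeout is an arbitrary choice for the sketch.

```python
import socket


def tcp_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Pass if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


class ServerState:
    """Track up/down state from successive check results using rise/fall."""

    def __init__(self, rise: int = 2, fall: int = 3):
        self.rise, self.fall = rise, fall
        self.up = True
        self._streak = 0  # consecutive results that contradict the current state

    def record(self, passed: bool) -> bool:
        if passed == self.up:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= (self.fall if self.up else self.rise):
                self.up = passed  # enough evidence: flip the state
                self._streak = 0
        return self.up


state = ServerState()
print([state.record(r) for r in [False, False, False, True, True]])
# [True, True, False, False, True]: down after 3 failures, up after 2 successes
```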

Alternate Solutions

If HAProxy is more complex than your needs call for, the following alternatives may be a better fit:

- Nginx: A fast and reliable web server that can also be used as a load balancer and proxy. Nginx is often used together with HAProxy for its caching and compression capabilities.

- Linux Virtual Server (LVS): A simple layer 4 load balancer included in many Linux distributions.

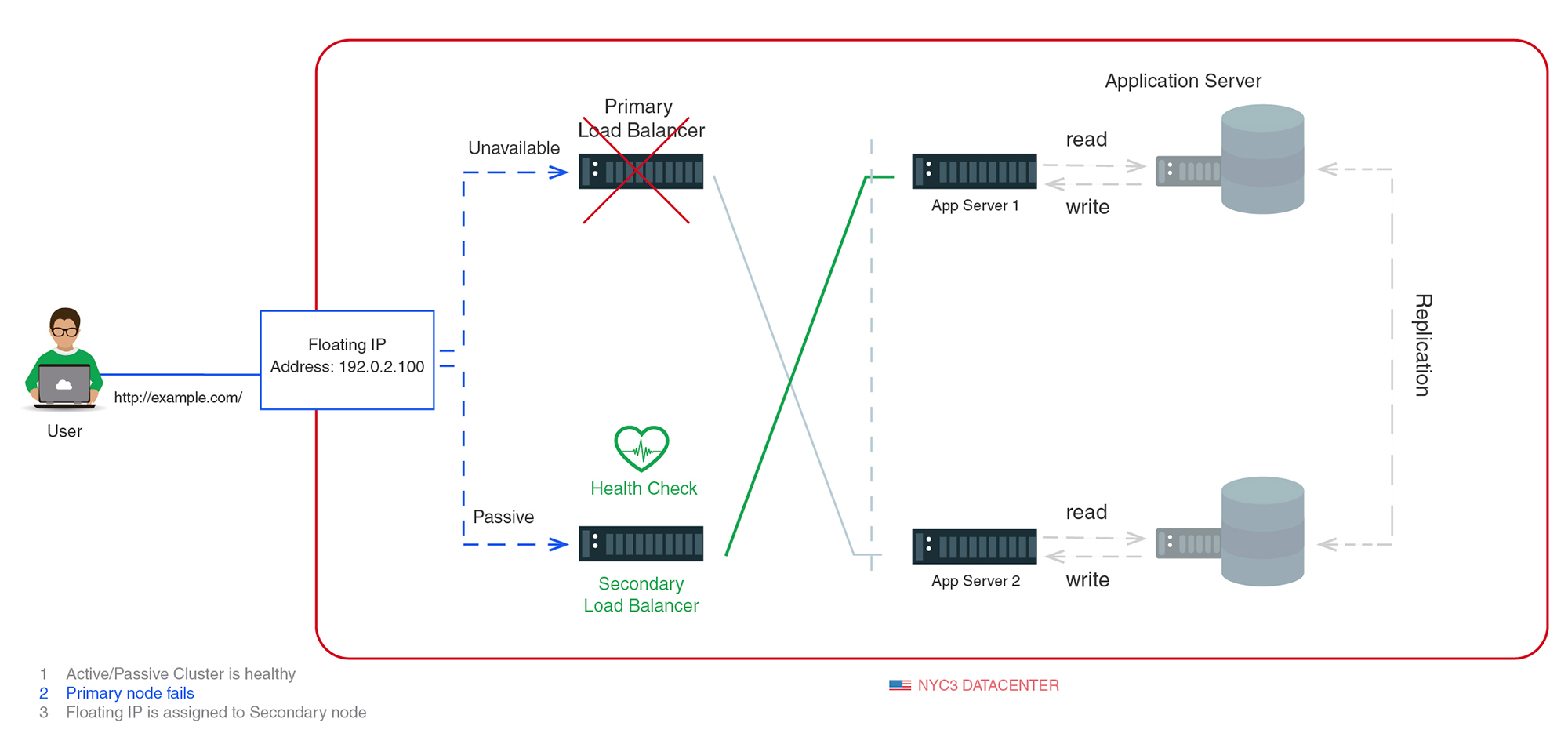

High Availability

So far, we have covered layer 4 and layer 7 load balancing. Both use a load balancer to decide which backend server responds to each user request. However, the load balancer itself is a single point of failure. If it goes down, the result is downtime; if it is overwhelmed with requests, the result is high latency. A high availability (HA) setup is an infrastructure with no single point of failure. It prevents a single server failure from causing downtime by adding redundancy at every layer of the architecture. A load balancer provides redundancy for the backend layer, but in a truly highly available setup, the load balancers themselves must be redundant as well.

The following diagram shows a basic high availability setup:

In this setup, several load balancers (one active and one or more passive) share a static IP address that can be remapped from one server to another. Users reach the service through this external IP address, which points to the currently active load balancer. If the active load balancer fails, a failover mechanism detects the failure and automatically reassigns the IP address to one of the passive load balancers.
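The failover decision itself can be modeled as a small function. This is a hypothetical, simplified sketch. In practice, a tool such as keepalived (which implements the VRRP protocol) handles failure detection and moves the IP address. Whether a recovered node takes the address back also depends on configuration. This sketch leaves the address with its current holder for as long as that holder stays healthy.

```python
from typing import Optional


def floating_ip_holder(balancers: list[str], healthy: set[str],
                       current: Optional[str]) -> Optional[str]:
    """Decide which load balancer should hold the shared IP address.

    `balancers` is in priority order. The current holder keeps the address
    while healthy; otherwise it moves to the first healthy load balancer.
    """
    if current in healthy:
        return current
    for lb in balancers:
        if lb in healthy:
            return lb
    return None  # every load balancer is down: the service is unavailable


holder = floating_ip_holder(["lb1", "lb2"], {"lb1", "lb2"}, None)    # 'lb1' (active)
holder = floating_ip_holder(["lb1", "lb2"], {"lb2"}, holder)         # 'lb2' (lb1 failed)
holder = floating_ip_holder(["lb1", "lb2"], {"lb1", "lb2"}, holder)  # stays 'lb2'
print(holder)
```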

Conclusion

You should now have a basic understanding of load balancing and of some of the ways HAProxy can meet your system's load-balancing needs. This gives you a solid foundation for improving the performance and reliability of your own server environments. To learn more about load balancing with Nginx, see our tutorial Nginx HTTP Proxying, Load Balancing, Buffering, and Caching: an Overview.

Happy Computing!
