Introduction

Technology and the internet have become central to our personal, academic, and professional lives, so the sheer number of websites and applications in existence today comes as no surprise. If you run a business, you probably want a web platform of your own: a web application lets you market and deliver your services to your target customers with ease.

Whatever your reason for creating a web application, you need to decide how to build it. There are many server setups to choose from, and the architecture you pick determines how you run and manage everything in your environment. That is why the decision deserves careful consideration.

How to Choose the Right Server Setup

So how do you decide which architecture is ‘right’ for your application? Start by thinking about your web application's requirements, that is, the features it must have to work efficiently for your specific use case. For example, you may want an application that is easy to scale, or one that works smoothly in browsers as well as on mobile devices. At the same time, your budget may be your primary concern.

Whatever your requirements are, you can build a custom solution for your application. In this tutorial, we will explore the server setups that people most commonly use for their web applications, discuss their use cases, and explain when each one is the best fit. To help you decide whether a setup suits you, we will also list the pros and cons of each architecture.

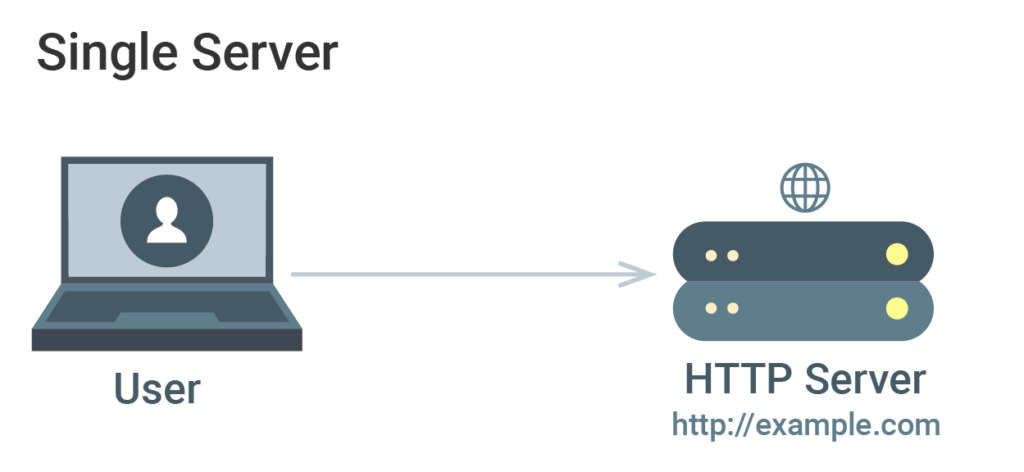

1. Everything on One Server

As the name suggests, the entire environment runs on a single server: your web server, your application server, and your database server. A common example is a LAMP stack (Linux, Apache, MySQL, and PHP) installed on one machine. You can follow our tutorials on how to install the LAMP stack on an Ubuntu server and how to install the LAMP stack on CentOS.

When to Use It:

This arrangement works best when you are short on time, because it is the simplest and quickest setup to configure. That makes it a good fit for simple web applications.

Benefits:

- Simple and easy to understand and implement.

- Takes little time to set up in its entirety.

Disadvantages:

- Does not allow for horizontal scalability.

- Offers very little in terms of component isolation.

- The application and the database compete for the same resources because they run on a single server.

- As a result, you may experience poor performance.

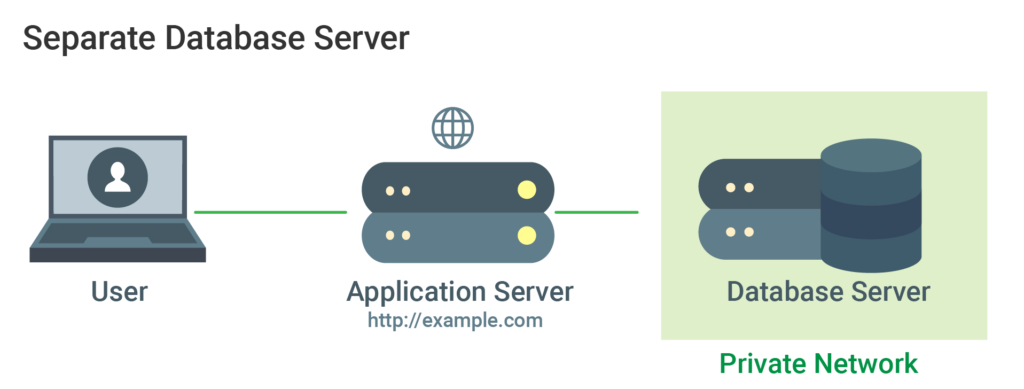

2. Separate Database Server

The main issue with a single server is that everything competes for limited resources, and this setup aims to resolve that problem. Here, the database management system (DBMS) runs separately from the application server. The database server sits in a private network and has its own resources, which results in better performance and increased security.

When to Use It:

This setup is still fairly simple to configure, so it works well if you want to deploy quickly. It is a good choice when you are worried about the database and the application competing for the same resources.

Benefits:

- The application and the database each get their own dedicated system resources, including CPU, memory, and I/O.

- You can scale the application and database tiers separately.

- You can add and remove resources on each server as you need them.

- Keeping the database off the public internet also improves security.
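The security benefit above depends on the application reaching the database over a private address. As a small illustration (not part of any particular tool), the Python sketch below checks a hypothetical `DB_HOST` setting with the standard `ipaddress` module and refuses to proceed if the address is not private. It assumes the host is configured as a literal IP address; a hostname would need a DNS lookup first, so the sketch simply rejects it. Note that `is_private` also treats loopback and documentation ranges as private, so treat this as a sanity check rather than a firewall.

```python
import ipaddress

# Hypothetical settings; in practice these come from your app's config or environment.
DB_HOST = "10.132.0.5"  # assumed private IP of the separate database server
DB_PORT = 3306          # MySQL's default port


def is_private_address(host: str) -> bool:
    """True if host is a literal IP that Python's ipaddress module treats as private
    (e.g. 10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16)."""
    try:
        return ipaddress.ip_address(host).is_private
    except ValueError:
        # Not a literal IP address (for example a hostname): reject it in this sketch.
        return False


def check_database_config(host: str) -> None:
    """Raise an error if the database would be reached over a public address."""
    if not is_private_address(host):
        raise RuntimeError(
            f"database host {host!r} is not a private IP; "
            "keep the database server on a private network"
        )


if __name__ == "__main__":
    check_database_config(DB_HOST)
    print(f"OK: database at {DB_HOST}:{DB_PORT} is on a private network")
```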

Disadvantages:

- A bit more complex than a single server setup.

- A low-bandwidth or high-latency network connection between the two servers can cause performance issues.

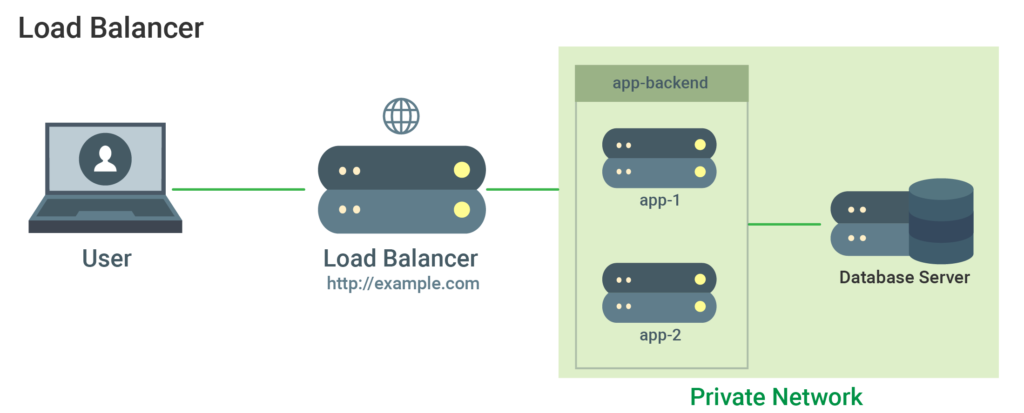

3. Reverse Proxy or Load Balancer

Load balancers are typically used in server environments to improve performance and reliability. They do this by ‘balancing the load’, i.e., distributing the workload across a pool of servers.
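To make ‘balancing the load’ concrete, here is a minimal in-process sketch of round-robin distribution, one common strategy that both HAProxy and Nginx support. The backend names are hypothetical, and a real load balancer forwards network connections and runs its own health checks; the sketch only shows how requests rotate across servers, skip a failed one, and pick up a newly added one.

```python
from typing import List


class RoundRobinBalancer:
    """Toy round-robin load balancer that skips backends marked as down."""

    def __init__(self, backends: List[str]):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # position of the next backend to try

    def add_backend(self, backend: str) -> None:
        """Horizontal scaling: add another server to the pool."""
        self.backends.append(backend)
        self.healthy.add(backend)

    def mark_down(self, backend: str) -> None:
        """Called when a health check fails; the backend stops receiving traffic."""
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self) -> str:
        """Return the next healthy backend in rotation order."""
        # Try each backend at most once per call so a fully failed pool cannot loop forever.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app1", "app2", "app3"])
    print([lb.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
    lb.mark_down("app2")                  # simulate a failed server
    print([lb.pick() for _ in range(4)])  # ['app3', 'app1', 'app3', 'app1']
```

Round robin assumes the servers have roughly equal capacity; for mixed pools, weighted or least-connections strategies are the usual alternatives.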

When to Use It:

Load balancers are extremely useful when you need to scale horizontally, which means adding more servers to the environment. You can also use an application-layer reverse proxy to serve several applications through the same domain and port. HAProxy, Nginx, and Varnish are examples of software that can perform reverse proxy load balancing.

Benefits:

- If one server fails, the load balancer redistributes its workload across the remaining servers.

- Allows you to perform horizontal scaling to increase or decrease the capacity of the environment.

- It can limit client connections to a sensible number and frequency, which offers some protection against DDoS attacks.

Disadvantages:

- If the load balancer does not have sufficient system resources, it can become a performance bottleneck for your application.

- It must be configured carefully to perform well.

- Significantly more complex than a single server or separate database server setup.

- You need to take into account factors such as SSL termination and how to handle applications that require sticky sessions.

- The main concern is that the load balancer itself is a single point of failure: if it goes down, your entire service goes down with it.
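Sticky sessions mean that every request from a given client is sent to the same backend server, which matters when session state lives in one application server's memory. One simple approach, similar in spirit to HAProxy's `balance source` or Nginx's `ip_hash`, is to hash a client identifier such as the source IP address. The sketch below assumes that approach; it is not how either tool actually computes its hash, and the backend names are hypothetical.

```python
import hashlib
from typing import List


def sticky_backend(client_id: str, backends: List[str]) -> str:
    """Map a client identifier (e.g. source IP or session cookie) to a backend.

    The same client_id always maps to the same backend while the backend
    list is unchanged, which is the property sticky sessions rely on.
    """
    if not backends:
        raise ValueError("no backends configured")
    # Use a stable digest: Python's built-in hash() for str is randomized per process.
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    pool = ["app1", "app2", "app3"]
    for client in ["198.51.100.4", "198.51.100.9", "198.51.100.4"]:
        print(client, "->", sticky_backend(client, pool))
```

The trade-off with plain modulo hashing is that adding or removing a backend changes `len(backends)` and reassigns many clients to different servers, breaking their sessions; consistent hashing is the usual remedy.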

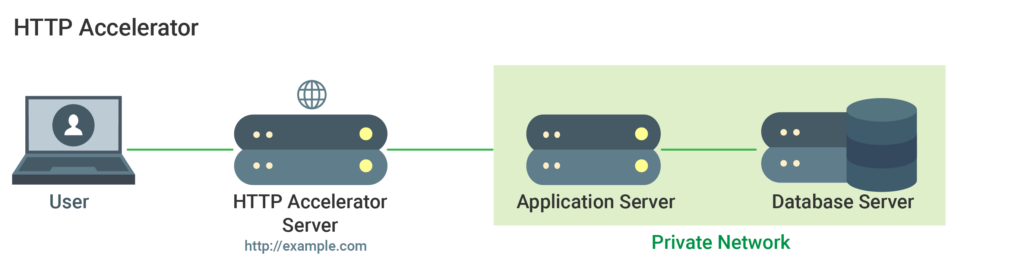

4. HTTP Accelerator or Caching Reverse Proxy

This setup speeds up the delivery of content to the users of your application. It uses several techniques to do so, the most important of which is caching responses from the application server. The first time a user requests a piece of content, the accelerator stores the response in memory; when similar requests arrive later, it serves the content straight from its cache without contacting the application server. Nginx, Varnish, and Squid are all capable of HTTP acceleration.
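The hit/miss logic described above can be sketched in a few lines of Python. This is a toy model, assuming a hypothetical `backend` function that stands in for the application server and a fixed time-to-live for cached entries; real accelerators such as Varnish also honor HTTP caching headers and evict entries when memory runs low. The counters make the cache-hit rate, which determines how much the accelerator actually helps, easy to observe.

```python
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Toy HTTP accelerator: caches application-server responses by URL path."""

    def __init__(self, backend: Callable[[str], str], ttl_seconds: float = 60.0):
        self.backend = backend  # hypothetical stand-in for the application server
        self.ttl = ttl_seconds  # how long a cached response is considered fresh
        self.cache: Dict[str, Tuple[float, str]] = {}  # path -> (stored_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        now = time.monotonic()
        entry = self.cache.get(path)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1  # cache-hit: the application server is not contacted
            return entry[1]
        self.misses += 1  # cache-miss or expired entry: ask the application server
        body = self.backend(path)
        self.cache[path] = (now, body)  # keep a copy for future requests
        return body

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


if __name__ == "__main__":
    calls = []

    def app_server(path: str) -> str:
        calls.append(path)
        return f"<html>content for {path}</html>"

    proxy = CachingProxy(app_server, ttl_seconds=30)
    for path in ["/", "/about", "/", "/", "/about"]:
        proxy.get(path)
    print(len(calls))        # 2: only the first request per path reached the app server
    print(proxy.hit_rate())  # 0.6 (3 hits out of 5 requests)
```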

When to Use It:

This setup is best suited to files and content that users request frequently. It also works well for content-heavy dynamic web applications.

Benefits:

- Caching and compression can significantly speed up the application and request processing.

- Serving responses from the cache reduces CPU load on the application server, which also improves site performance.

- You can also use it as a reverse proxy load balancer.

Disadvantages:

- It must be tuned well to get the best performance out of it.

- If the cache-hit rate is low, performance can suffer.

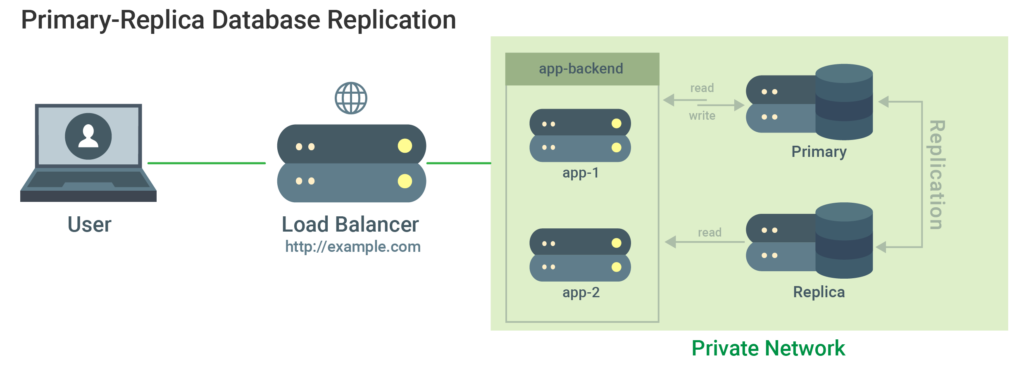

5. Primary-Replica Database Replication

A primary-replica database replication setup is most useful for systems that perform far more reads than writes; content management systems (CMS), for example, benefit greatly from this architecture. Replication requires one primary node and one or more replica nodes. All updates go only to the primary node, while reads are distributed across all of the nodes.
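Because updates must go to the primary while reads can go to any node, the application (or a proxy in front of the database) has to route each query. The sketch below is a deliberately naive router that classifies SQL by its first keyword; the node names are hypothetical, and real applications usually route per connection or transaction rather than by parsing SQL, since a statement like `SELECT ... FOR UPDATE` would be misclassified here. Setting `reads_on_primary=False` reserves the primary for updates only.

```python
import itertools
from typing import List


class ReplicationRouter:
    """Naive router: updates go to the primary, reads rotate across read nodes."""

    # Statements treated as updates; anything else is treated as a read.
    WRITE_KEYWORDS = {"INSERT", "UPDATE", "DELETE", "REPLACE", "CREATE", "ALTER", "DROP"}

    def __init__(self, primary: str, replicas: List[str], reads_on_primary: bool = True):
        self.primary = primary
        read_nodes = [primary, *replicas] if reads_on_primary else list(replicas)
        if not read_nodes:
            raise ValueError("no nodes available for reads")
        self._read_nodes = itertools.cycle(read_nodes)

    def route(self, sql: str) -> str:
        """Return the name of the node that should run this statement."""
        words = sql.split()
        if not words:
            raise ValueError("empty query")
        if words[0].upper() in self.WRITE_KEYWORDS:
            return self.primary
        return next(self._read_nodes)


if __name__ == "__main__":
    router = ReplicationRouter("db-primary", ["db-replica-1", "db-replica-2"])
    print(router.route("UPDATE posts SET title = 'Hi' WHERE id = 1"))  # db-primary
    for _ in range(3):
        print(router.route("SELECT * FROM posts"))  # db-primary, db-replica-1, db-replica-2
```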

When to Use It:

As mentioned above, a replication-based database setup improves a system's read performance, which makes it a good fit for read-heavy applications such as a CMS.

Benefits:

- Spreading reads across the replicas improves the database's read performance.

- Reserving the primary node for updates only can also improve write performance, since the primary spends no time serving reads.

Disadvantages:

- Any application that accesses the database must be able to decide which node should receive each update and read request.

- If the primary node fails, updates stop until you resolve the issue.

- There is no built-in failover mechanism to handle a failure of the primary node.

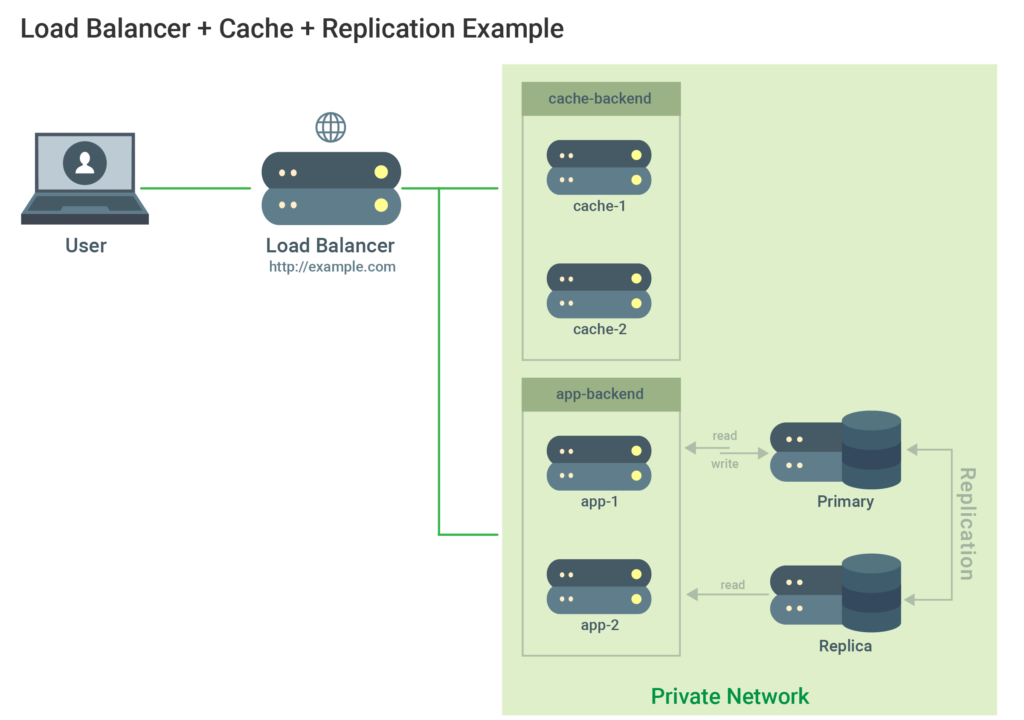

Using the Server Setups in Combination

Luckily, you can also combine several of these techniques to get the outcome you want. For example, you can load balance the application servers, add caching servers, and replicate the database, all in a single environment. The goal is to capitalize on the benefits of each technique without introducing too many new problems or too much complexity.

Example:

Let’s walk through an example of such an environment.

In this environment, the load balancer sends requests for static content, such as images, CSS, and JavaScript files, to the caching servers. It directs all other requests to the application servers.
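The load balancer usually makes this static-versus-dynamic decision from the request path. In HAProxy you would express it with ACLs and in Nginx with `location` blocks; the Python sketch below shows the same rule, assuming a short, hypothetical list of static file extensions and using the pool names `cache-backend` and `app-backend` from the walkthrough that follows.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Assumed list of static file types; adjust to match your application.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".woff2"}


def choose_pool(url: str) -> str:
    """Return the (hypothetical) backend pool that should handle a request URL."""
    path = urlsplit(url).path                    # drop scheme, host, and query string
    suffix = PurePosixPath(path).suffix.lower()  # e.g. ".css", or "" for "/posts/42"
    return "cache-backend" if suffix in STATIC_EXTENSIONS else "app-backend"


if __name__ == "__main__":
    for url in ["/static/site.css?v=3", "/images/logo.PNG", "/posts/42"]:
        print(url, "->", choose_pool(url))
```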

Let’s say a user is requesting some static content from the environment. Here is what would take place:

- The load balancer checks with the cache-backend to determine whether the requested content is already cached (a cache-hit) or not (a cache-miss).

- On a cache-hit, the cache-backend returns the content to the load balancer, which sends it to the user.

- On a cache-miss, the cache server forwards the request, through the load balancer, to the app-backend.

- The app-backend reads the content from the database and returns it to the load balancer.

- The load balancer forwards the response to the cache-backend, which caches the content before returning it to the load balancer.

- The load balancer then returns the content to the user.
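The static-content steps above can be modelled as a small simulation. The three classes are hypothetical stand-ins for the real servers (Python dicts play the roles of the database and the cache), and the log records whether each request was a cache-hit or a cache-miss.

```python
from typing import Dict, List, Optional


class AppBackend:
    """Stand-in for an application server that reads content from the database."""

    def __init__(self, database: Dict[str, str]):
        self.database = database

    def handle(self, path: str) -> str:
        return self.database[path]


class CacheBackend:
    """Stand-in for a caching server; a dict plays the role of its cache."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def lookup(self, path: str) -> Optional[str]:
        return self._store.get(path)  # None means cache-miss

    def save(self, path: str, body: str) -> str:
        self._store[path] = body  # cache the content...
        return body               # ...then hand it back to the load balancer


class LoadBalancer:
    def __init__(self, cache: CacheBackend, app: AppBackend):
        self.cache = cache
        self.app = app
        self.log: List[str] = []

    def serve_static(self, path: str) -> str:
        cached = self.cache.lookup(path)    # check with the cache-backend
        if cached is not None:
            self.log.append(f"cache-hit {path}")
            return cached                   # returned to the user
        self.log.append(f"cache-miss {path}")
        body = self.app.handle(path)        # app-backend reads from the database
        body = self.cache.save(path, body)  # response passes through the cache-backend
        return body                         # returned to the user


if __name__ == "__main__":
    lb = LoadBalancer(CacheBackend(), AppBackend({"/logo.png": "<logo bytes>"}))
    lb.serve_static("/logo.png")
    lb.serve_static("/logo.png")
    print(lb.log)  # ['cache-miss /logo.png', 'cache-hit /logo.png']
```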

On the other hand, here is what will happen if the user requests dynamic content:

- The load balancer receives the request from the user.

- The load balancer forwards the request to the app-backend.

- The app-backend reads the requested content from the database and returns it to the load balancer.

- The load balancer returns the content to the user.

The main benefits of such a combined environment are improved reliability and performance. However, it still has two single points of failure: the load balancer and the primary database server.

Conclusion

You can use each of these server setups on its own, or combine several of them to create a solution tailored to your application. There is no single ‘correct’ answer; it all depends on what you need your architecture to do.

A foundational understanding of how each server setup works will help you make the right decision for your own application. It is usually best to start small and simple, then increase the complexity of your setup as you gain experience.

Happy Computing!
